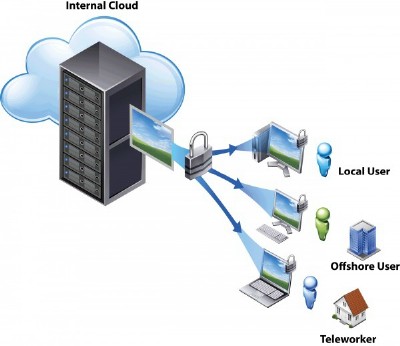

The subject of the LRS meeting on 25th February was that mysterious place where we are told we shall be archiving all our digital “stuff” in the future. LRS President Andy Sinclair MM0FMF talked about The Cloud, what it is, why it’s used and whether it will rain all over your data. Not a simple subject!

The Cloud – the one we are talking about is not quite like this one!

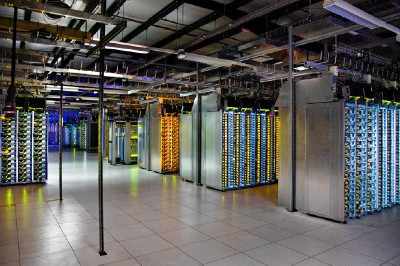

More like a number of Data Centres like this!

In this equipment, every yellow light is a server containing up to 32 CPU cores.

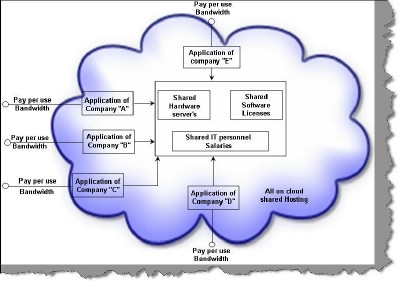

What is its most important characteristic? Cost Effectiveness!

But using the Cloud means that you must BACK UP all your files! (Just in case …).

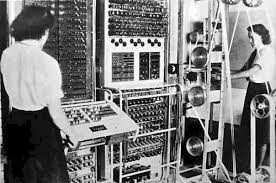

First a bit of computer history.

The first programmable electronic computer was Colossus, used for codebreaking at Bletchley Park during WWII.

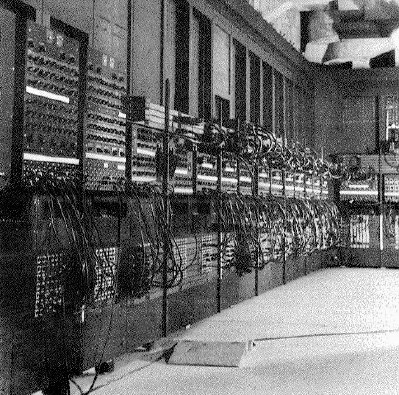

In the USA the ENIAC computer was developed for ballistics calculations.

In 1961 the IBM 7090 was used successfully for space travel calculations.

The 7090 was a solid-state version of the valved 709 and provided exactly the same facility.

It cost $63k per month to rent and as such had to be run 24/7 to be cost-effective.
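To see why round-the-clock running mattered, here is a rough sketch of the arithmetic in Python. Only the $63k monthly rent comes from the talk; the 30-day month and the "office hours" pattern of 8 hours on 22 working days are assumptions made purely for illustration.

```python
MONTHLY_RENT_USD = 63_000  # rental figure quoted in the talk


def cost_per_hour(hours_used_per_month: float) -> float:
    """Effective rental cost for each hour the machine is actually in use."""
    return MONTHLY_RENT_USD / hours_used_per_month


# Assumption: a 30-day month run 24 hours a day (720 hours).
round_the_clock = cost_per_hour(24 * 30)

# Assumption: 8 hours a day on 22 working days (176 hours).
office_hours = cost_per_hour(8 * 22)

print(f"24/7 running:      ${round_the_clock:,.2f} per hour")
print(f"Office hours only: ${office_hours:,.2f} per hour")
```

On these assumed figures, each hour of use costs roughly four times as much if the machine only runs during office hours.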

The IBM 360, announced in 1964, was the first effective scientific / business computer.

NASA bought 5 for the Apollo calculations to put men on the moon.

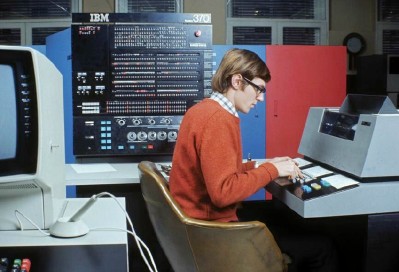

The IBM 370 in 1970 was bigger / better / faster than the 360.

It was able to run all 360 software, the first example of backwards-compatibility.

The main problem with mainframe computers was that they were large and expensive.

So mini-computers such as the PDP-11 were developed in 1970 ($35k vs $5m for an IBM 370).

They were used in labs and factories, and Bell Labs ran its Unix software on them.

Then came microprocessor-based “microcomputers” such as the IBM PC.

The computers were all networked together on the Internet, providing worldwide connectivity.

The growth of the Internet was explosive, with Xbox / PlayStation games, on-demand movies and all sorts of other “stuff”. Andy estimates there are now over 23 billion websites (and counting)!

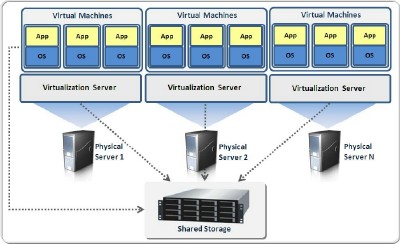

How many servers are needed? Too few and you have insufficient capacity; too many and you’re wasting money. Virtualization was the answer – making one computer pretend to be lots of small ones (because they are so fast now). This is a Virtualization Server, providing shared storage.

But I still don’t know how many servers are required!

Brainwave – the answer is to sell elastic chunks of capacity!

The Cloud provides elastic computing, dynamically.
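As a rough illustration of the "elastic chunks" idea, the Python sketch below compares a fixed fleet sized for the busiest hour with one that grows and shrinks with demand. Every number in it is made up for the example (the hourly demand figures and the 100 requests per second that one server is assumed to handle), and `servers_needed` is a hypothetical helper, not any cloud provider's API.

```python
import math

REQUESTS_PER_SERVER = 100  # assumed capacity of one server (hypothetical)


def servers_needed(requests_per_second: int) -> int:
    """Smallest number of servers that can carry the load (always at least one)."""
    return max(1, math.ceil(requests_per_second / REQUESTS_PER_SERVER))


# Made-up demand, in requests per second, for six consecutive hours.
demand = [50, 120, 480, 950, 300, 80]

# Elastic: rent only what each hour needs.
elastic = [servers_needed(d) for d in demand]
elastic_server_hours = sum(elastic)

# Fixed: buy enough servers for the busiest hour and keep them all running.
fixed_fleet = max(elastic)
fixed_server_hours = fixed_fleet * len(demand)

print("Servers per hour (elastic):", elastic)
print("Elastic server-hours:", elastic_server_hours)
print("Fixed server-hours:  ", fixed_server_hours)
```

With these invented figures the elastic approach uses 22 server-hours against 60 for the fixed fleet, which is the cost-effectiveness argument in miniature.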

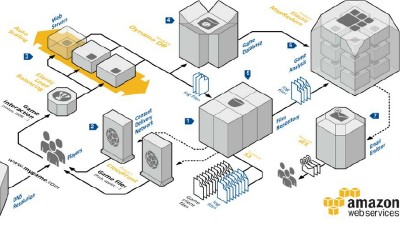

Amazon Web Services (AWS) built a scalable system for web storage and sells access to others.

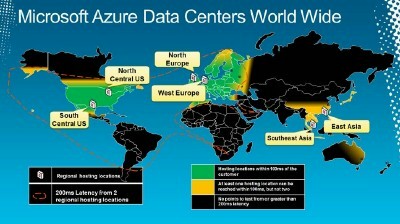

Microsoft Azure was developed after Windows started losing market share.

It operates Data Centres around the world, each holding a concentration of data.

Like these!

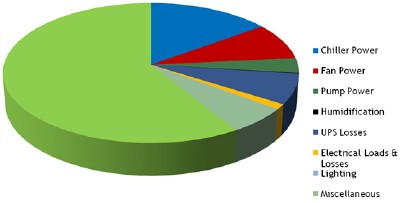

A practical difficulty in running such Data Centres is the HEAT that they generate, which must be removed.

The majority of the heat comes from the CPUs but there are several other sources.

A lot of cooling pipes can be required!

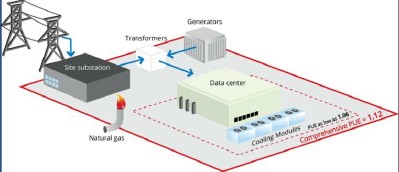

Data Centre and its power facility

This is a Data Centre park.

And in the event of a power failure, a massive battery back-up is required, along with the ability to pump all the stored data to another Data Centre.

Power flow in a Data Centre.

The Cloud? – it started about this big but it growed!